LLaVA-NeXT: Tackling Multi-image, Video, and 3D in Large Multimodal Models

Table of Contents

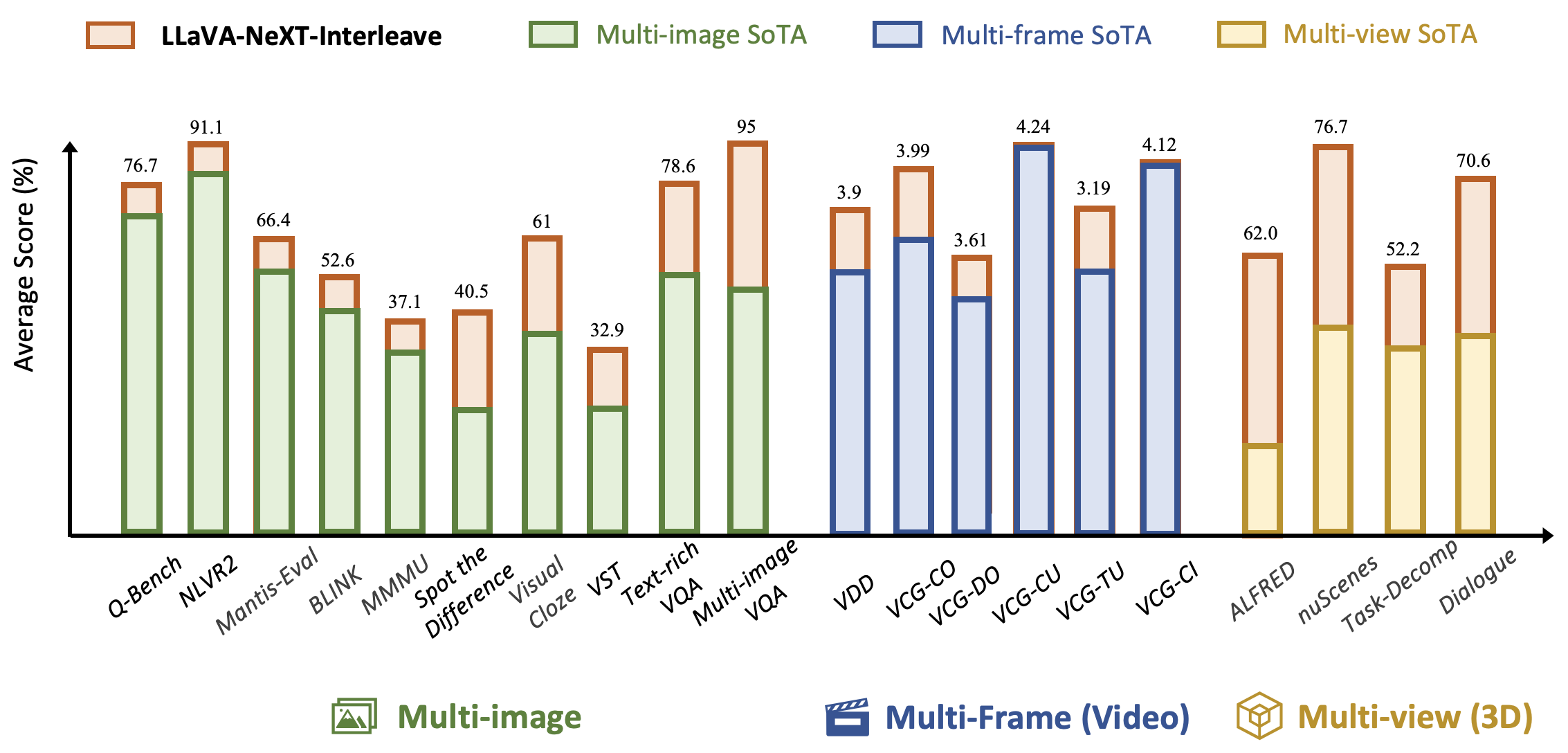

Recent advancements in Large Multimodal Models (LMMs) have showcased impressive capabilities in multimodal understanding and reasoning. However, most existing open-source LMMs such as LLaVA-NeXT have primarily focused on pushing the performance limit of single-image, leaving the potential of multi-image scenarios largely unexplored. Considering the diverse range of computer vision scenarios and data formats—including single and multi-image inputs, videos, and 3D data—there is a pressing need to develop methodologies for open LMMs that can operate effectively across these varied contexts. We observe that the image-text interleaved format can naturally serve as a general data template to unify different scenarios, e.g., single-image or multi-image as special cases, video as multi-frames, and 3D as multi-views. Therefore, we present LLaVA-NeXT-Interleave, an all-around LMM that extends the model capabilities to new real-world settings: Multi-image, Multi-frame (videos), Multi-view (3D) and maintains the performance of the Multi-patch (single-image) scenarios. We denote the four settings as M4.

Highlights:

- Interleave data format unifies different tasks. We represent multi-image, video, 3D, and single-image data all into an interleaved training format, which unifies different tasks in a single LLM.

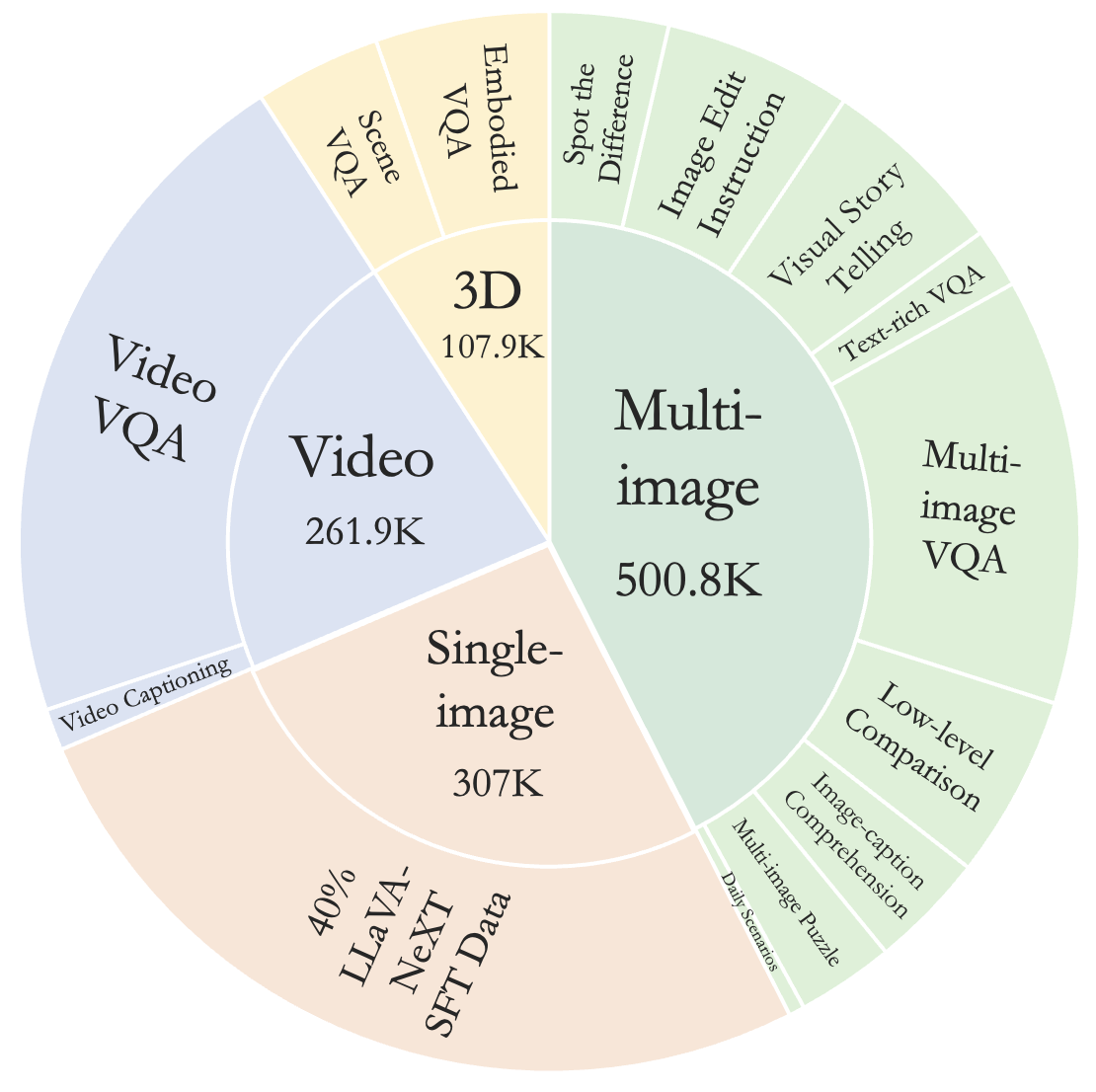

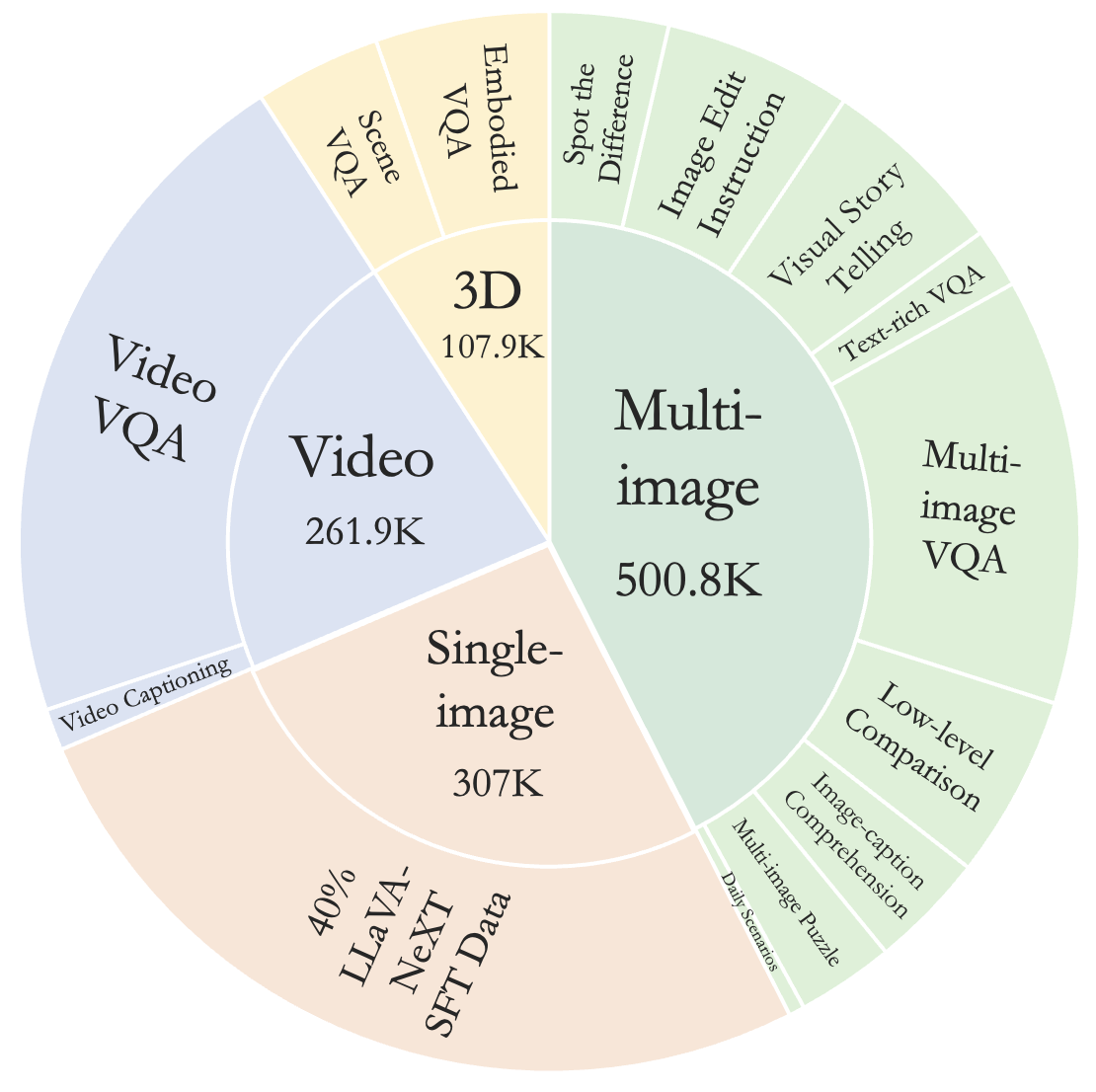

- New datasets: (1) Training Data: M4-Instruct. We compile a high-quality dataset with 1177.6k samples, spanning 4 primary domains (multi-image, video, 3D, and single-image). (2). LLaVA-Interleave Bench. We curate a diverse set of tasks to evaluate the multi-image capabilities in 3 scenarios, including 9 newly collected and 13 existing in/out-domain benchmarks.

- SoTA Performance.(1). With a single model, LLaVA-NeXT-Interleave can achieve leading results in different multi-image benchmarks compared to the previous SoTA. (2). With a proper data mix of different scenarios, the performance of previous individual tasks can be improved or maintained. For example, we maintain the single-image performance of LLaVA-NeXT and improve performance on video tasks.

- Emerging capabilities with cross-task transfer. By jointly training on a diverse set of visual data modalities, the model shows emerging capabilities to transfer tasks between different scenarios.

Section 1 - Emerging Capabilities

Task Transfer between Single-Image and Multi-Image

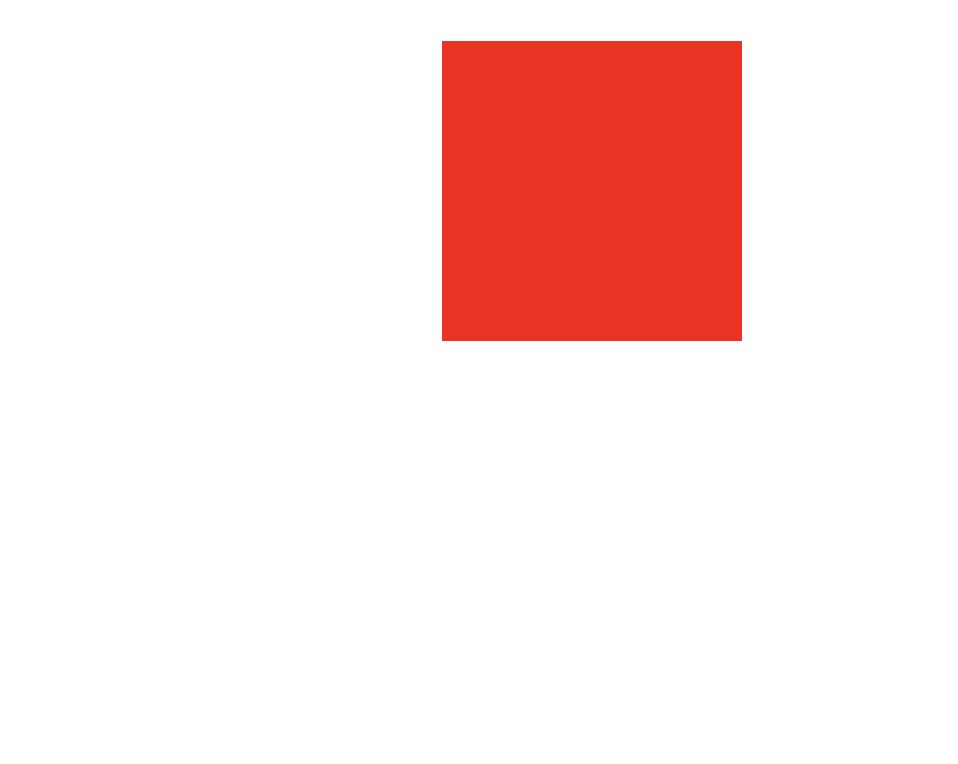

- Multi-image coding: Our multi-image training data does not include the coding-related problem. However, by jointly training on single-image data with coding problems, the model learns to write code based on multiple images. In this example, the model writes an HTML code to simulate the movement of the square from the first image to the second image.

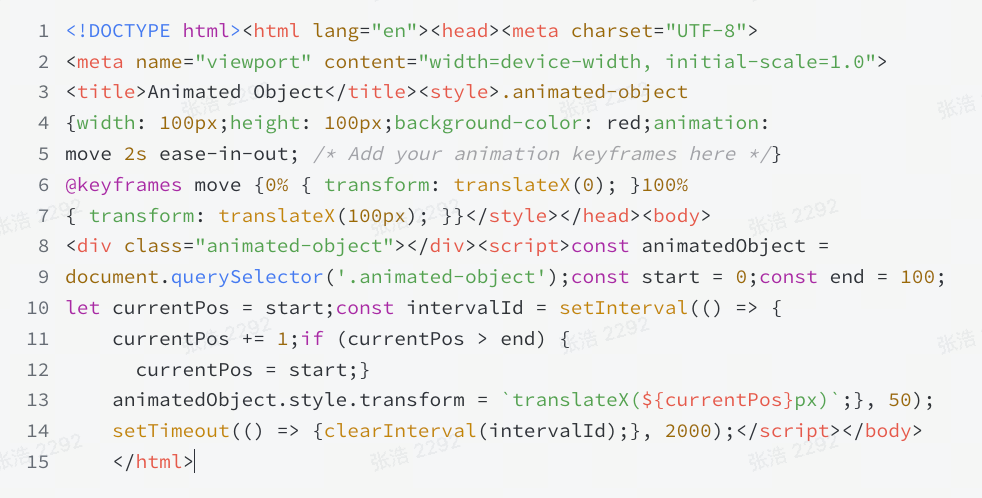

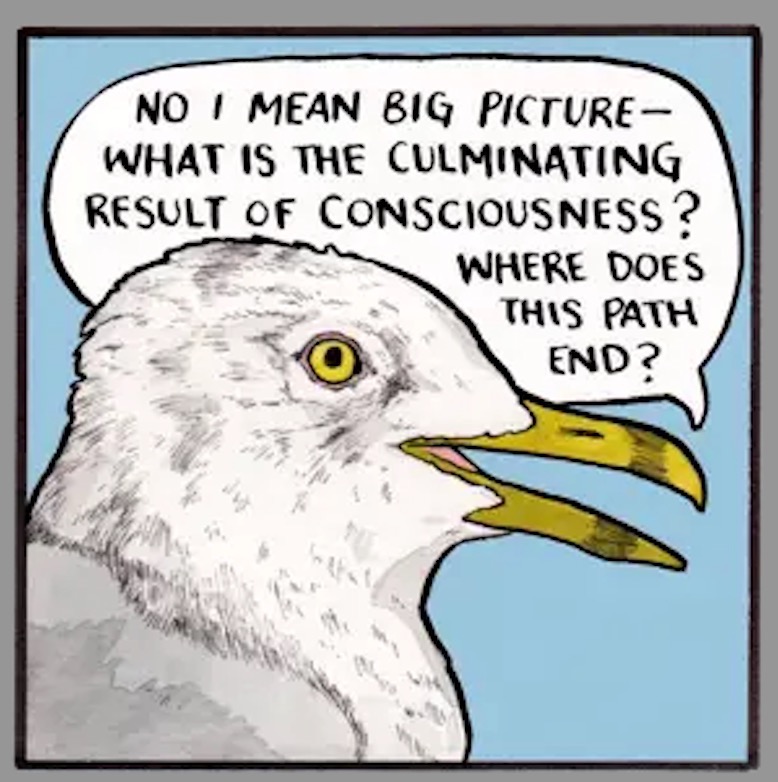

- What is fun about the images: The model learns to reason over mutliple images and tell the funny part of the images. It is only in single-image tasks but not in multi-image tasks.

Task Transfer between Image and Video

- Multi-video difference: All the video data trained is about single-video QA or caption. Tell-the-difference data only appears in multi-image data, and the difference is only about the difference between two single images. By joint training, the model generalizes to tell the difference of two videos. In this example, viewing two similar videos of different styles, the model can tell the general difference and list the detailed differences.

- Twitter post for video: The model is trained to write a Twitter post on multi-image data. By joint training, the model can write a Twitter post of a video.

Real-World Applications

- PPT summarization and QA: The model can summarize and retrieve information from multiple images.

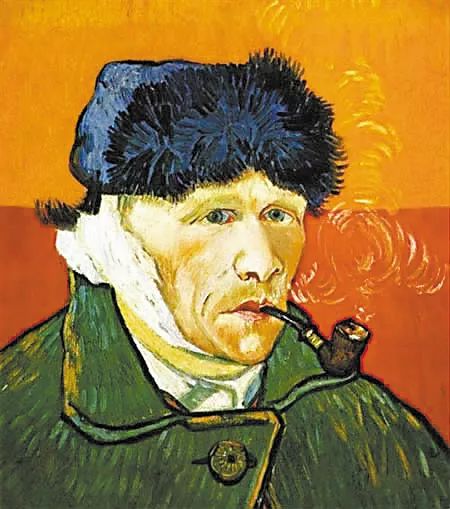

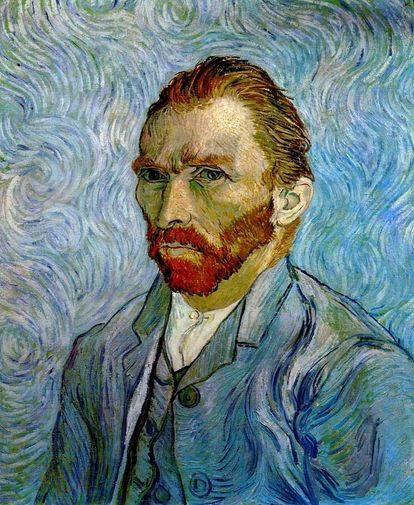

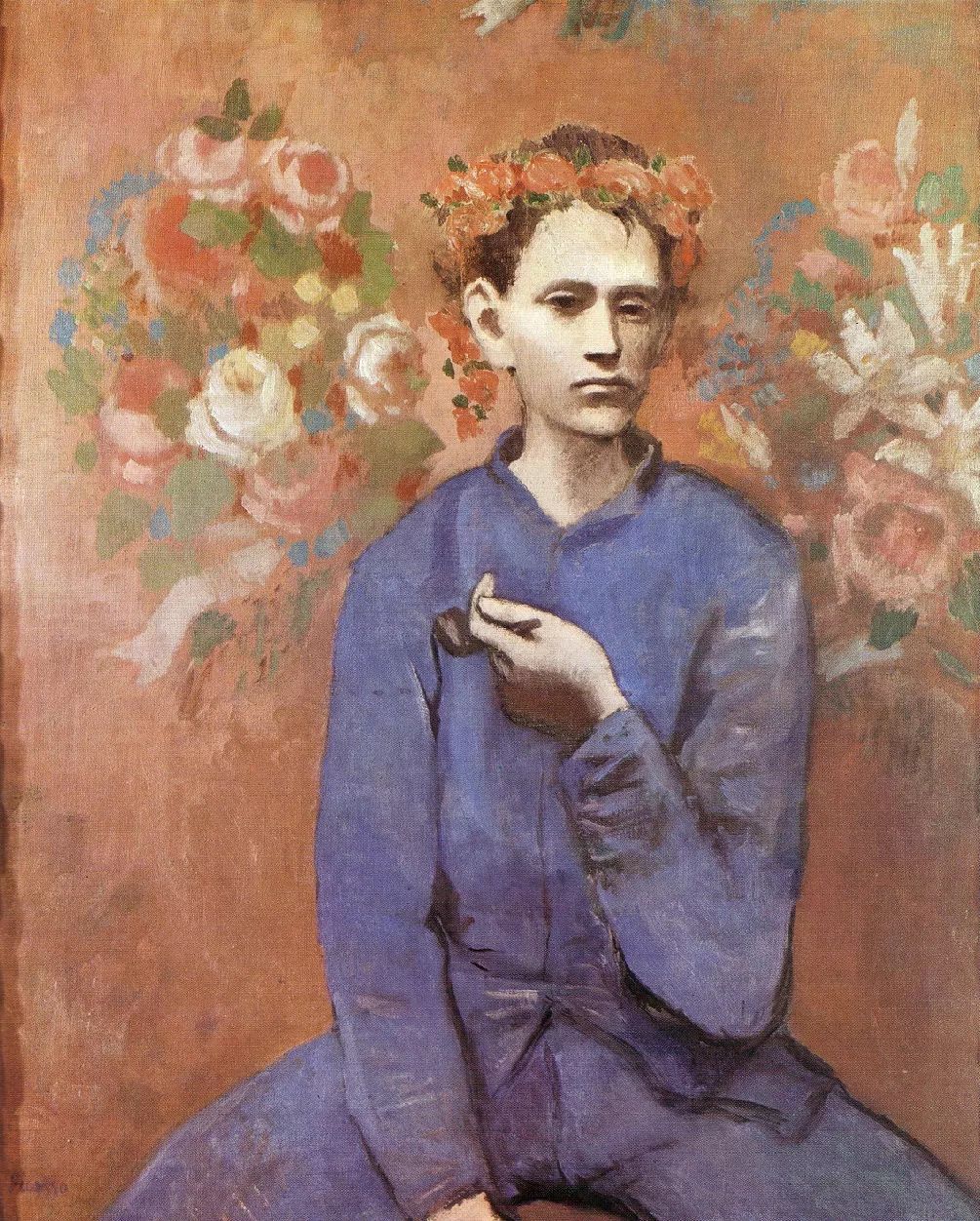

- Painting styles/Category difference: The model generalizes to recognize artists' painting styles and tell different categories.

- Image editing: The model can be used to create image editing prompts for image generation.

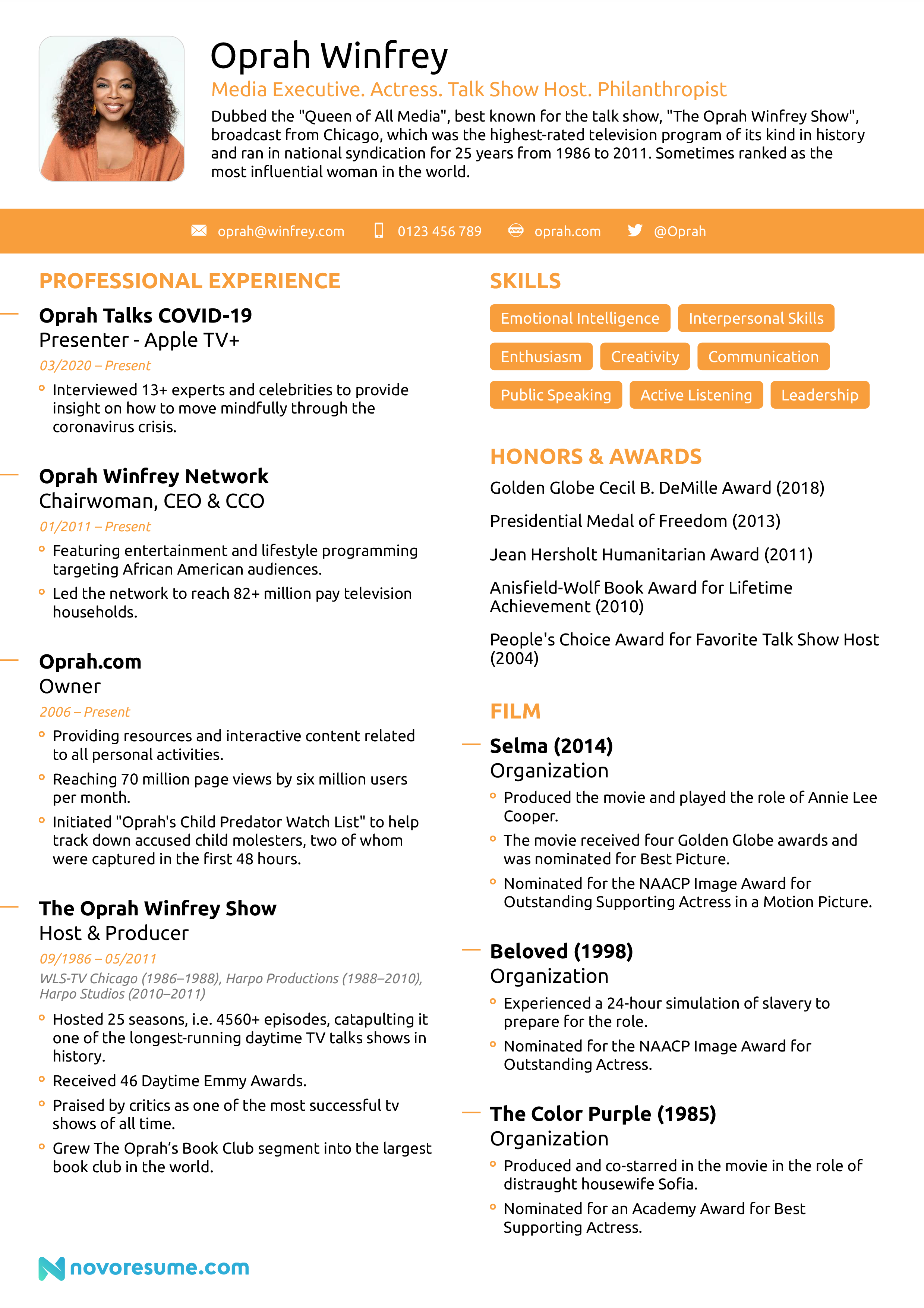

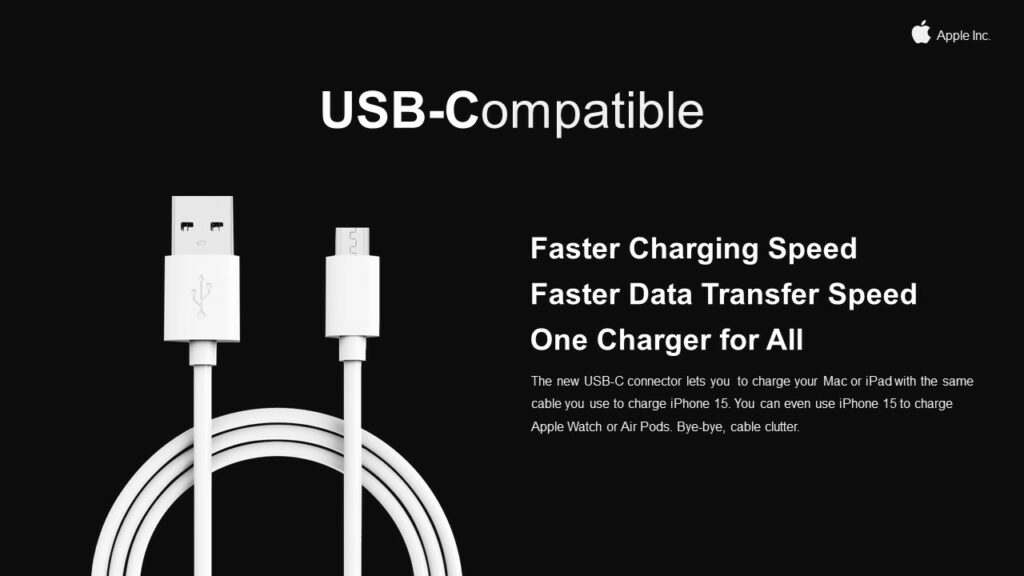

- Multi-doc QA: The model can summarize the information of multiple documents and provide comparisons for different documents.

Section 2 - Interleaved Visual Instruction Tuning

Task Overview. We utilize interleave format to unify different tasks of data input, including the following four settings:

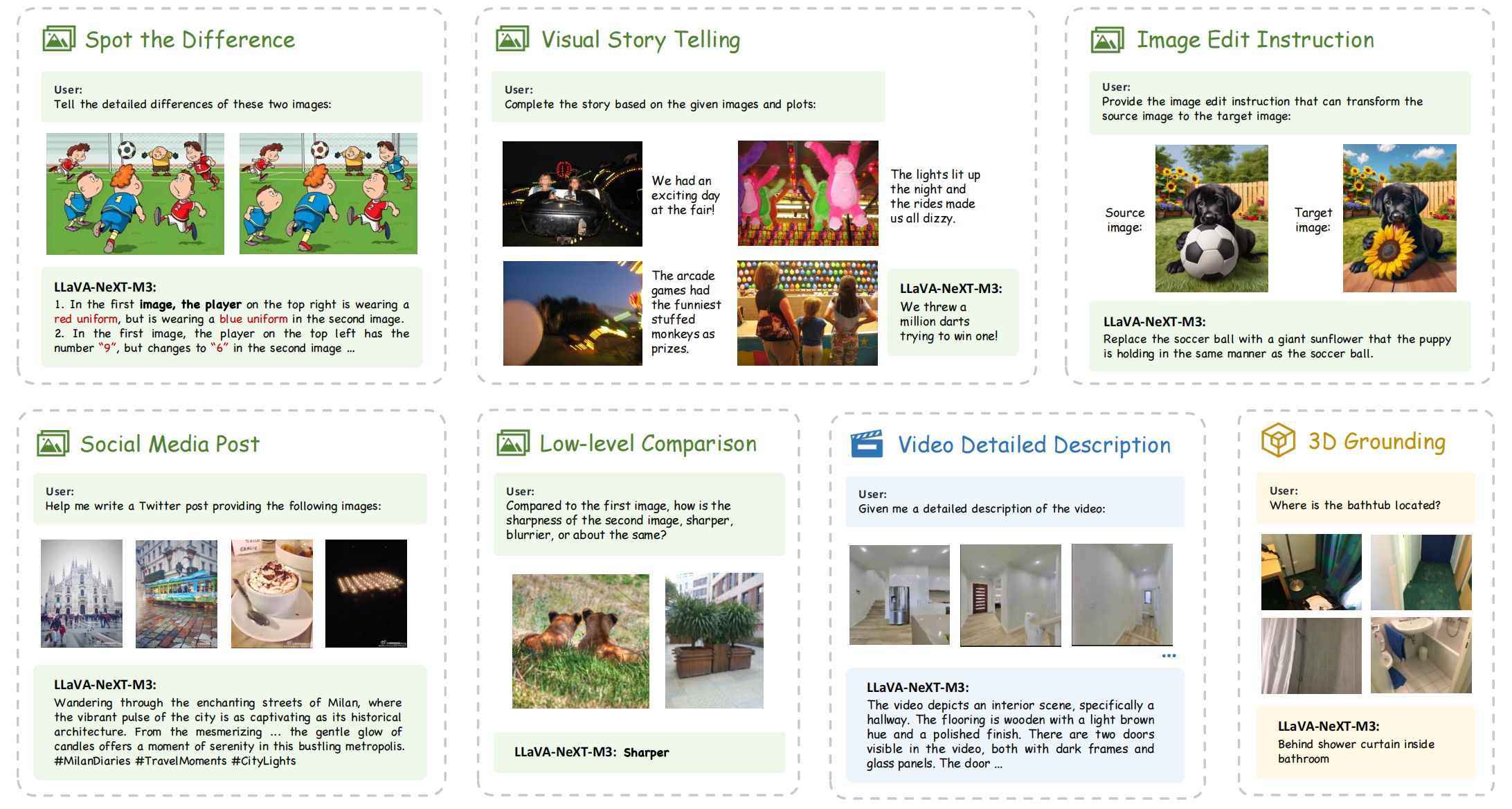

- Multi-image scenarios include instructions with single or multiple images, and interleaved text-image input. This setting covers 12 challenging real-world tasks included in our training data, such as spotting the difference, visual storytelling, image editing instruction generation, interleaved multi-image dialogue, multi-image puzzle, low-level multi-image assessment, etc.

- Multi-frame scenarios refer to taking as input the video data by sampling it into multiple frames, preserving temporal cues across the multi-image sequence. The video-based tasks mainly focus on 2 tasks: video detailed captioning and video VQA.

- Multi-view scenarios represent 3D environments by multi-view images from different perspectives, where the visual correspondence and disparity can indicate spatial information in the 3D world. For 3D perception, we include 2 tasks: embodied VQA (dialogue and planning), and 3D scene VQA (captioning and grounding).

- Multi-patch represents the single-image scenario. This is because the design of AnyRes in LLaVA-NeXT allows us to divide a single image into multiple patches, compatible with the interleaved format. This is used to maintain single-image performance and accommodate cross-task transfer.

M4-Instruct: Training Data

To empower all-round multi-image capabilities, we curate a comprehensive dataset including 1177.6K training samples, M4-Instruct, spanning multi-image, multi-frame, and multi-view scenarios with 14 tasks and 41 datasets, along with single-image data to preserve basic instruction-following capabilities. We exhibit the detailed data statistics in the table below.

Most multi-image datasets are collected from previous public efforts and rigorously converted into our data format, referring to DEMON and Mantis. On top of that, we also utilize GPT-4V to annotate 3 new tasks to enable more diverse capabilities, i.e., Real-world Difference, Synthetic Difference, and Twitter Post.

For video data, we utilize a subset of 255k from LLaVA-Hound, including 240k video QA and 15k video captions. We also include NExT-QA and STAR in our training.

For 3D data, we utilize 3D data from Nuscenes QA, ALFRED, ScanQA, and 3D-LLM.

For single-image data, we use 40% of original LLaVA-NeXT single-image data.

[Fold / Unfold to See the Details of Data Statistics]

Task

Dataset

Scenario

# Samples

Multi-image Scenarios

Spot the Difference(42.6K)

Real-world Difference

Realistic

6.7K

Synthetic Difference

Sythetic

7.0K

Spot-the-Diff

Surveilance

10.8K

Birds-to-Words

Birds

14.2K

CLEVR-Change

Solids

3.9K

Image Edit Instruction(67.7K)

HQ-Edit

Sythentic

50K

MagicBrush

Realistic

14.2K

IEdit

Realistic

3.5K

Visual Story Telling(67.5K)

AESOP

Cartoon

6.9K

FlintstonesSV

Cartoon

22.3K

PororoSV

Cartoon

12.3K

VIST

Realistic

26K

Text-rich VQA(21.3K)

WebQA

Webpage

9.3K

TQA

Textbook

8.2K

OCR-VQA

OCR

1.9K

DocVQA

Document

1.9K

Multi-image VQA(153.3K)

NLVR2

Realistic

86.4K

MIT-States_StateCoherence

General

1.9K

MIT-States_PropertyCoherence

General

1.9K

RecipeQA_ImageCoherence

Recipe

8.7K

VISION

Industrial

9.9K

Multi-VQA

General

5K

IconQA

General

34.6K

VizWiz

General

4.9K

Low-level Comparison(65.9K)

Coinstruct

Low-level

50K

Dreamsim

Low-level

15.9K

Image-caption Comprehension (41.8K)

ImageCoDe

General

16.6K

Contrast-Caption

General

25.2K

Daily Scenarios (5.7K)

MMChat_Twitter_Post

General

5.7K

Multi-image Puzzle (35K)

Raven

Abstract

35K

Multi-frame (Video) Scenarios

Video QA(246.9K)

NExT-QA

General

3.9K

STAR

General

3K

ShareGPTVideo-VQA

General

240K

Video Detailed Captioning (15K)

ShareGPTVideo-Caption

General

15K

Multi-view (3D) Scenarios

Scene VQA(45.4K)

Nuscenes

Outdoor

9.8K

ScanQA

Indoor Realistic

25.6K

3D-LLM-Scene

Indoor Realistic

10K

Embodied VQA(62.5K)

ALFRED

Indoor Synthetic

22.6K

3D-LLM-Dialogue

Indoor Realistic

20K

3D-LLM-Planning

Indoor Realistic

19.9K

Single-image Scenarios

Single-image Tasks(307K)

Randomly sampling 40% SFT data of LLaVA-NeXT General

307K

To empower all-round multi-image capabilities, we curate a comprehensive dataset including 1177.6K training samples, M4-Instruct, spanning multi-image, multi-frame, and multi-view scenarios with 14 tasks and 41 datasets, along with single-image data to preserve basic instruction-following capabilities. We exhibit the detailed data statistics in the table below.

Most multi-image datasets are collected from previous public efforts and rigorously converted into our data format, referring to DEMON and Mantis. On top of that, we also utilize GPT-4V to annotate 3 new tasks to enable more diverse capabilities, i.e., Real-world Difference, Synthetic Difference, and Twitter Post.

For video data, we utilize a subset of 255k from LLaVA-Hound, including 240k video QA and 15k video captions. We also include NExT-QA and STAR in our training.

For 3D data, we utilize 3D data from Nuscenes QA, ALFRED, ScanQA, and 3D-LLM.

For single-image data, we use 40% of original LLaVA-NeXT single-image data.

[Fold / Unfold to See the Details of Data Statistics]

| Task | Dataset | Scenario | # Samples |

|---|---|---|---|

| Multi-image Scenarios | |||

| Spot the Difference(42.6K) | Real-world Difference | Realistic | 6.7K |

| Synthetic Difference | Sythetic | 7.0K | |

| Spot-the-Diff | Surveilance | 10.8K | |

| Birds-to-Words | Birds | 14.2K | |

| CLEVR-Change | Solids | 3.9K | |

| Image Edit Instruction(67.7K) | HQ-Edit | Sythentic | 50K |

| MagicBrush | Realistic | 14.2K | |

| IEdit | Realistic | 3.5K | |

| Visual Story Telling(67.5K) | AESOP | Cartoon | 6.9K |

| FlintstonesSV | Cartoon | 22.3K | |

| PororoSV | Cartoon | 12.3K | |

| VIST | Realistic | 26K | |

| Text-rich VQA(21.3K) | WebQA | Webpage | 9.3K |

| TQA | Textbook | 8.2K | |

| OCR-VQA | OCR | 1.9K | |

| DocVQA | Document | 1.9K | |

| Multi-image VQA(153.3K) | NLVR2 | Realistic | 86.4K |

| MIT-States_StateCoherence | General | 1.9K | |

| MIT-States_PropertyCoherence | General | 1.9K | |

| RecipeQA_ImageCoherence | Recipe | 8.7K | |

| VISION | Industrial | 9.9K | |

| Multi-VQA | General | 5K | |

| IconQA | General | 34.6K | |

| VizWiz | General | 4.9K | |

| Low-level Comparison(65.9K) | Coinstruct | Low-level | 50K |

| Dreamsim | Low-level | 15.9K | |

| Image-caption Comprehension (41.8K) | ImageCoDe | General | 16.6K |

| Contrast-Caption | General | 25.2K | |

| Daily Scenarios (5.7K) | MMChat_Twitter_Post | General | 5.7K |

| Multi-image Puzzle (35K) | Raven | Abstract | 35K |

| Multi-frame (Video) Scenarios | |||

| Video QA(246.9K) | NExT-QA | General | 3.9K |

| STAR | General | 3K | |

| ShareGPTVideo-VQA | General | 240K | |

| Video Detailed Captioning (15K) | ShareGPTVideo-Caption | General | 15K |

| Multi-view (3D) Scenarios | |||

| Scene VQA(45.4K) | Nuscenes | Outdoor | 9.8K |

| ScanQA | Indoor Realistic | 25.6K | |

| 3D-LLM-Scene | Indoor Realistic | 10K | |

| Embodied VQA(62.5K) | ALFRED | Indoor Synthetic | 22.6K |

| 3D-LLM-Dialogue | Indoor Realistic | 20K | |

| 3D-LLM-Planning | Indoor Realistic | 19.9K | |

| Single-image Scenarios | |||

| Single-image Tasks(307K) | General | 307K | |

Task Examples. We provide examples to illustrate the multi-image/fame/view tasks of M4-Instruct dataset.

[Fold / Unfold to see task overview]

LLaVA-Interleave Bench

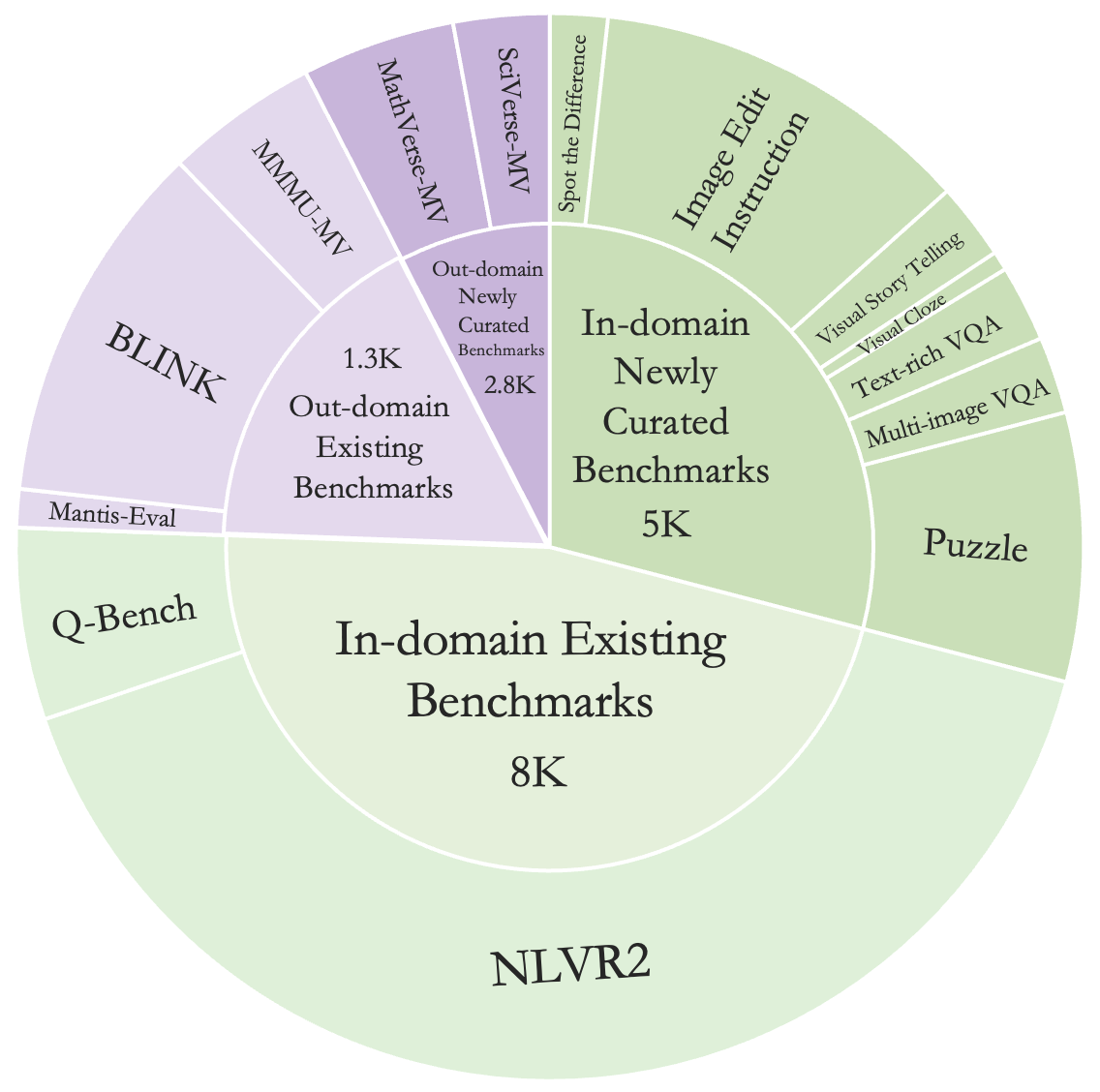

We categorize multi-image reasoning task into two classes:

(1) In-domain Evaluation includes tasks that have been "seen" during training, designed to verify the model performance within familiar scenarios. We adopt 7 newly curated multi-image tasks corresponding to training datasets, and 2 existing benchmarks, Q-Bench and NLVR2, with 12.9K samples in total.

(2) Out-domain Evaluation involves tasks that do not overlap with the training scenarios, aiming to reveal the generalization capacity of LMMs. We construct 2 new tasks for multi-image mathematical and scientific comprehension, and utilize 3 existing benchmarks, Mantis-Eval, BLINK, and MMMU, with 4.1K samples in total.

[Fold / Unfold to See Table for detailed multi-image benchmark statistics]

| Task | Dataset | Scenario | # Samples |

|---|---|---|---|

| In-domain Evaluation - Newly Curated Benchmarks | |||

| Spot the Difference(0.3K) | Spot-the-Diff | Surveilance | 0.1K |

| Birds-to-Words | Birds | 0.1K | |

| CLEVR-Change | Solids | 0.1K | |

| Image Edit Instruction(2K) | HQ-Edit | Sythentic | 1K |

| MagicBrush | Realistic | 0.9K | |

| IEdit | Realistic | 0.1K | |

| Visual Story Telling(0.4K) | AESOP | Cartoon | 0.1K |

| FlintstonesSV | Cartoon | 0.1K | |

| PororoSV | Cartoon | 0.1K | |

| VIST | Realistic | 0.1K | |

| Text-rich VQA(0.4K) | WebQA | Webpage | 0.1K |

| TQA | Textbook | 0.1K | |

| OCR-VQA | OCR | 0.1K | |

| DocVQA | Document | 0.1K | |

| Multi-image VQA(0.4K) | MIT-States_StateCoherence | General | 0.1K |

| MIT-States_PropertyCoherence | General | 0.1K | |

| RecipeQA_ImageCoherence | Recipe | 0.1K | |

| VISION | Industrial | 0.1K | |

| Puzzle (1.4K) | Raven | Abstract | 1.4K |

| In-domain Evaluation - Existing Benchmarks | |||

| NLVR2 (7K) | NLVR2 | Realistic | 7K |

| Q-Bench (1K) | Q-Bench | Low-level | 1K |

| Out-domain Evaluation - Newly Curated Benchmarks | |||

| MathVerse-mv (0.8K) | MathVerse | Math Diagram | 0.8K |

| SciVerse-mv (0.4K) | SciVerse | Scientific Diagram | 0.4K |

| Out-domain Evaluation - Existing Benchmarks | |||

| Mantis-Eval (0.2K) | Mantis-Eval | General | 0.2K |

| BLINK (1.9K) | BLINK | General | 1.9k |

| MMMU-mv (test) (0.8K) | MMMU | Scientific Diagram | 0.8K |

Section 3 - Evaluation Results

We conduct extensive evaluation compared with existing open-source models to demonstrate the multimodal capabilities of LLaVA-NeXT-Interleave, which covers a variety of existing and newly curated in/out-domain benchmarks. In the tables below, we exhibit the results across 3 primary scenarios, where our model achieves consistently leading performance for multi-image/frame/view reasoning. For our final solution, LLaVA-NeXT-Interleave (0.5/7/14B) adopts Qwen 1.5-0.5B, 7B and -14B as base LLMs, SigLIP-400M with 384x384 resolutions as the vision encoder, and a two-layer MLP as the projection layer.

Multi-image Evaluation

As reported in the table, the average multi-image performance of LLaVA-NeXT-Interleave surpasses previous open-source models in both in- and out-domain benchmarks. For in-domain evaluation, our model demonstrates significant advantages across various tasks as expected, due to the multi-image instruction tuning with M4-Instruct. For out-domain evaluation, LLaVA-NeXT-Interleave also showcases superior generalization capacity within novel scenarios, e.g., comparable to GPT-4V on Mantis-Eval and BLINK.

| Model | In-domain Evaluation | Out-domain Evaluation | ||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Avg | Newly Curated Benchmarks | Existing Benchmarks | Avg | Newly Curated Benchmarks | Existing Benchmarks | |||||||||||

| Spot the Difference | Image Edit Instruction | Visual Story Telling | Text-rich VQA | Multi-image VQA | Multi-image Puzzle | Q-Bench | NLVR2 | MathVerse-mv | SciVerse-mv | Mantis-Eval |

BLINK | MMMU-mv (test) | ||||

| GPT4V | 39.2 | 12.5 | 11.0 | 10.9 | 54.5 | 52.0 | 17.1 | 76.5 | 88.8 | 57.78 | 60.3 | 66.9 | 62.7 | 51.1 | 47.9 | |

| Open-source LMMs | ||||||||||||||||

| 32.4 | 12.9 | 13.2 | 10.1 | 59.6 | 39.4 | 9.0 | 51.0 | 68.0 | 29.4 | 13.5 | 12.2 | 46.1 | 41.8 | 33.5 | ||

| VPG-C (7B) | 35.8 | 27.8 | 15.2 | 21.5 | 38.9 | 46.8 | 2.4 | 57.6 | 73.2 | 34.5 | 24.3 | 23.1 | 52.4 | 43.1 | 29.4 | |

| Mantis (7B) | 39.6 | 17.6 | 11.2 | 12.5 | 45.2 | 52.5 | 25.7 | 69.9 | 87.4 | 39.3 | 27.2 | 29.3 | 59.5 | 46.4 | 34.1 | |

| Our Models: LLaVA-NeXT-Interleave | ||||||||||||||||

| 0.5B | 43.9 | 34.3 | 21.6 | 29.7 | 63.9 | 54.8 | 35.4 | 52.0 | 67.8 | 33.1 | 13.3 | 12.2 | 45.6 | 39.2 | 28.6 | |

| 7B | 58.6 | 37.1 | 24.3 | 33.1 | 76.1 | 87.5 | 48.7 | 74.2 | 88.8 | 42.8 | 32.8 | 31.6 | 62.7 | 52.6 | 34.5 | |

| 14B | 62.3 | 40.5 | 24.5 | 33.3 | 78.6 | 95.0 | 59.9 | 76.7 | 91.1 | 44.3 | 33.4 | 32.7 | 66.4 | 52.1 | 37.1 | |

Multi-frame Evaluation

For video understanding, we also evaluate our models on NextQA, MVBench, Video Detailed Description (VDD), ActivityNet-QA, and VideoChat-GPT. For the tasks evaluated by GPT, we follow VideoChat-GPT to use the same GPT version.

Compared with previous video-based LMMs under similar model size, LLaVA-NeXT-Interleave achieves superior results on many benchmarks, though not specifically designed for video tasks. We also follow LLaVA-Hound to add DPO training after our M4-Instruct training. After adding DPO, our 7B model achieves SOTA performance on VDD and VideoChat-GPT benchmarks, surpassing previous SOTA model LLaVA-NEXT-Video (34B). This demonstrates the effective temporal understanding and reasoning capabilities of our model across sequential frames.

| Model | NextQA (ACC) |

MVBench | ActivityNet-QA (Acc/Score) |

Video Detailed Description | VideoChat-GPT | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| Correctness | Detail | Context | Temporal | Consistency | Avg | |||||

| Closed-source LMMs | ||||||||||

| GPT-4V | - | - | - | 4.00 | 4.09 | 3.88 | 4.37 | 3.94 | 4.02 | 4.06 |

| Open-source LMMs | ||||||||||

| VideoChatGPT (7B) | - | - | 35.2/2.70 | - | 2.40 | 2.52 | 2.62 | 1.98 | 2.37 | 2.38 |

| Video-LLaVA (7B) | - | - | 45.3/3.30 | - | 2.87 | 2.94 | 3.44 | 2.45 | 2.51 | 2.84 |

| VISTA-LLAMA (7B) | - | - | 48.3/3.30 | - | 2.44 | 2.31 | 2.64 | 3.18 | 2.26 | 2.57 |

| VideoChat2 (7B) | 68.6 | 51.9 | 49.1/3.30 | - | 3.02 | 2.88 | 3.51 | 2.66 | 2.81 | 2.98 |

| LLaMA-VID (7B) | - | 50.2 | 47.4/3.30 | 2.84 | 3.01 | 2.97 | 3.54 | 2.53 | 2.6 | 2.93 |

| LLaVA-NeXT-Video (7B) | - | - | 53.5/3.20 | 3.32 | 3.39 | 3.29 | 3.92 | 2.6 | 3.12 | 3.26 |

| - | - | 60.2/3.50 | 3.72 | 3.64 | 3.45 | 4.17 | 2.95 | 4.08 | 3.66 | |

| - | - | 64.4/3.60 | 3.84 | 3.81 | 3.55 | 4.24 | 3.14 | 4.12 | 3.77 | |

| Our Models: LLaVA-NeXT-Interleave | ||||||||||

| (0.5B) | 59.5 | 45.6 | 48.0/2.84 | 3.25 | 3.12 | 2.97 | 3.62 | 2.36 | 3.27 | 3.07 |

| (7B) | 78.2 | 53.1 | 55.3/3.13 | 3.57 | 3.51 | 3.28 | 3.89 | 2.77 | 3.68 | 3.43 |

| (14B) | 79.1 | 54.9 | 56.2/3.19 | 3.59 | 3.65 | 3.37 | 3.98 | 2.74 | 3.67 | 3.48 |

| DPO (7B) | 77.9 | 52.3 | 55.0/3.13 | 3.90 | 3.99 | 3.61 | 4.24 | 3.19 | 4.12 | 3.83 |

Multi-view Evaluation (3D)

For 3D perception, we adopt 3 in-domain benchmarks to evaluate the 3D spatial understanding performance of our model, which are 3D-assisted Dialog, Task Decomposition, ScanQA-val, ALFRED, and nuScenes VQA. The first two tasks are constructed by 3D-LLM.

Compared to 3D-LLM and Point-LLM with additional point clouds as input, LLaVA-NeXT-Interleave only accepts multi-view images to interpret the 3D world, attaining significantly higher scores for in-door and out-door scenarios.

| Model | In-domain Evaluation | |||||

|---|---|---|---|---|---|---|

| Avg | 3D-assisted Dialogue | Task Decomposition | ScanQA (val) | ALFRED | nuScenes VQA | |

| Closed-source LMMs | ||||||

| Flamingo | 20.5 | 27.9 | 33.2 | 31.1 | 5.3 | 4.9 |

| GPT-4V | 34.6 | 31.2 | 35.4 | 32.6 | 10.3 | 63.7 |

| Open-source LMMs | ||||||

| ImageBind-LLM | 20.8 | 31.4 | 32.3 | 28.6 | 4.7 | 6.8 |

| Point-Bind & Point-LLM | 22.5 | 38.3 | 35.8 | 34.6 | 0.6 | 3.3 |

| 3D-LLM | 22.9 | 39.3 | 37.8 | 35.7 | 1.4 | 0.4 |

| Mantis (7B) | 18.7 | 2.6 | 14.7 | 16.1 | 14.0 | 46.2 |

| Our Models: LLaVA-NeXT-Interleave | ||||||

| 0.5B | 53.0 | 67.2 | 48.5 | 29.3 | 57.0 | 62.8 |

| 7B | 58.2 | 69.3 | 51.4 | 32.2 | 61.6 | 76.5 |

| 14B | 59.2 | 70.6 | 52.2 | 34.5 | 62.0 | 76.7 |

[Fold / Unfold to See Single-image Evaluation (multi-patch)]

Single-image Evaluation (multi-patch)

| Model | LLM | ai2d | chartqa | docvqa | mme* | scienceqa | pope | Avg |

|---|---|---|---|---|---|---|---|---|

| LLaVA-NeXT | 0.5B | 51.65 | 50.16 | 59.13 | 52.83 | 59.96 | 85.36 | 59.80 |

| 52.20 | 52.20 | 59.20 | 52.00 | 60.60 | 86.78 | 60.5 | ||

| LLaVA-NeXT | 7B | 72.73 | 66.28 | 75.57 | 60.96 | 71.10 | 86.90 | 72.30 |

| 73.90 | 67.16 | 75.70 | 63.53 | 72.63 | 86.75 | 73.3 | ||

| LLaVA-NeXT | 14B | 77.49 | 72.12 | 79.96 | 67.68 | 78.88 | 87.32 | 77.2 |

| LLaVA-NeXT-Interleave |

76.52 | 71.24 | 78.91 | 66.21 | 77.44 | 87.90 | 76.40 |

We also add 307k of original LLaVA-NEXT single image data, which makes our model capable of doing single-image tasks. We use anyres training for single-image data, which divides an image into multiple patches, forming another multi-image setting. By adding 307k single-image data (40% of original LLaVA-NEXT data), we maintain single-image performance of LLaVA-NEXT. As single-image data is of high quality and contains diverse instructions, adding single-image data also improves the instruction-following ability and enables task transfer from single-image to multi-image, which is demonstrated in our demo and the ablation study).

Section 4 - Training Techinques

We illustrate two key techniques for training LLaVA-NeXT-Interleave using the M4-Instruct dataset, and provide abalation studies for analysis.

Continue training from single-image model

To better leverage the pre-trained vision-language alignment, we adopt an off-the-shelf LLaVA-NeXT-Image as the base model, which has gone through stage-1 558K image-caption pre-training and stage-2 760K single-image fine-tuning. On top of this checkpoint, we perform the multi-image instruction tuning with the M4-Instruct dataset.

As shown by the ablation below, compared to the training based on the stage-1 pre-training, the 'continue training from stage 2' scheme performs better. In this way, we can inherit the instruction-following capabilities in single-image tasks, and better extend the scope to multi-image, video, and 3D scenarios. In addition, single-image performance cannot be maintained if training directly from stage 1.

| Continue training | Mutl-image | Single-image | ActivityNet-QA (Acc/Score) |

MVBench | Video Detailed Description | VideoChat-GPT | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Mantis-Eval |

BLINK | Q-Bench | NLVR2 | ScanQA | ai2d | chartqa | docvqa | MME* | pope | scienceqa_img | Correctness | Detail | Context | Temporal | Consistency | ||||

| stage-1 | 41.0 | 37.6 | 47 | 54.0 | 27.7 | 46.3 | 38.3 | 47.5 | 47.1 | 85.4 | 59.4 | 44.7/2.17 | 43.0 | 2.96 | 2.97 | 2.87 | 3.49 | 2.42 | 3.14 |

| stage-2 | 45.6 | 39.2 | 52 | 67.8 | 29.3 | 52.2 | 52.2 | 59.2 | 52.0 | 86.8 | 60.6 | 48.0/2.84 | 45.6 | 3.25 | 3.12 | 2.97 | 3.62 | 2.36 | 3.27 |

Mix training for in-the-front and interleaved formats

For interleaved multi-image input, we have two format choices for the positions of image tokens during training. The first is to place all the image tokens in front of the text and refer to each image in the sentence with a special token, aligning with the format in the single-image model. The second preserves the interleaved instruction to put image tokens in the place they are originally in, which extends models to real interleaved data format.

In the below ablation, we train on the multi-image data with different formats. The results indicate that mixing two strategies during training leads to higher performance in both two inference schemes, which provides more flexibility for multi-image input format by users.

| Training Setting |

Inference Setting |

In-domain Evaluation | ||||

|---|---|---|---|---|---|---|

| Avg | Spot the Difference | Visual Story Telling | Text-rich VQA | Q-Bench | ||

| In-the-front format | Interleaved format | 52.88 | 36.8 | 30.5 | 70.1 | 74.0 |

| In-the-front format | 54.27 | 36.6 | 32.8 | 74.7 | 75.3 | |

| Interleaved format | Interleaved format | 55.38 | 37.8 | 32.9 | 76.2 | 76.0 |

| In-the-front format | 52.35 | 36.1 | 29.0 | 72.9 | 71.8 | |

| Mix format | Interleaved format | 56.96 | 38.3 | 32.5 | 78.1 | 76.9 |

| In-the-front format | 56.62 | 37.9 | 32.5 | 78.4 | 76.3 | |

[Fold / Unfold to see more ablations on pooling and data]

Training strategy comparison on video (Pooling vs not pooling)

In this ablation, we study the impact of image token pooling. We train and infer our model under two settings: pooling to 1/4 and not pooling with ShareGPTVideo-Caption+QA(255K) data. Pooling to a 1/4 setting is similar to LLaVA-NEXT-Video, which uses the pooling technique to tradeoff between the number of image tokens and the number of frames. In our experiment, we find that not pooling yields better performance under similar #image tokens.

During training, we sample 10 frames for videos. In this table, we also observe that adding more frames (from 10 to 16) during inference improves performance.

| Train Setting | Inference Setting | Inference #frames | # Image tokens | ActivityNet-QA (Acc/Score) |

Avg | VDD | VideoChat-GPT | ||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Correctness | Detail | Context | Temporal | Consistency | |||||||

| 40 | 40x729x1/4=10x729 | 52.75/3.53 | 3.35 | 3.38 | 3.46 | 3.25 | 3.87 | 2.59 | 3.57 | ||

| 64 | 64x729x1/4=16x729 | 52.7/3.53 | 3.33 | 3.38 | 3.45 | 3.23 | 3.86 | 2.49 | 3.55 | ||

| 10 | 10x729 | 52.9/3.48 | 3.38 | 3.46 | 3.43 | 3.26 | 3.85 | 2.64 | 3.61 | ||

| Not Pooling | Not Pooling | 16 | 16x729 | 54.4/3.51 | 3.41 | 3.46 | 3.48 | 3.28 | 3.87 | 2.74 | 3.62 |

Effects of adding more tasks and data

To study the impact of adding more data, we conduct experiments under different data settings and evaluate the video benchmark. As we gradually add more data, performance consistently improves. VDD means Video Detailed Description.

| Data | Next-QA | Avg | VDD | VideoChat-GPT | ||||

|---|---|---|---|---|---|---|---|---|

| Correctness | Detail | Context | Temporal | Consistency | ||||

| video | 42.60 | 3.40 | 3.46 | 3.47 | 3.27 | 3.87 | 2.74 | 3.61 |

| video + single-image | 67.70 | 3.40 | 3.49 | 3.46 | 3.30 | 3.85 | 2.71 | 3.60 |

| video + multi-image | 77.70 | 3.42 | 3.50 | 3.50 | 3.31 | 3.90 | 2.70 | 3.63 |

| video + both | 78.20 | 3.45 | 3.58 | 3.50 | 3.27 | 3.87 | 2.77 | 3.68 |

Team

-

Feng Li*†: Hong Kong University of Science and Technology

( Work collaborated with ByteDance)

( Work collaborated with ByteDance)

-

Renrui Zhang*: The Chinese University of Hong Kong

(Work collaborated with ByteDance)

(Work collaborated with ByteDance)

-

Hao Zhang*: Hong Kong University of Science and

Technology

(Work collaborated with ByteDance)

(Work collaborated with ByteDance)

-

Yuanhan Zhang: Nanyang Technological University

( Work collaborated with ByteDance)

( Work collaborated with ByteDance)

-

Bo Li: Nanyang Technological University

( Work collaborated with ByteDance)

( Work collaborated with ByteDance)

-

Wei Li: Bytedance

-

Zejun Ma: Bytedance

-

Chunyuan Li: Bytedance

- *Core contributors, †Project lead

Related Blogs

- LLaVA-NeXT: What Else Influences Visual Instruction Tuning Beyond Data?

- LLaVA-NeXT: Stronger LLMs Supercharge Multimodal Capabilities in the Wild

- LLaVA-NeXT: A Strong Zero-shot Video Understanding Model

- LLaVA-NeXT: Improved reasoning, OCR, and world knowledge

- Accelerating the Development of Large Multimodal Models with LMMs-Eval

Citation

@misc{li2024llavanext-interleave,

title={LLaVA-NeXT: Tackling Multi-image, Video, and 3D in Large Multimodal Models},

url={https://llava-vl.github.io/blog/2024-06-16-llava-next-interleave/},

author={Li, Feng and Zhang, Renrui and Zhang, Hao and Zhang, Yuanhan and Li, Bo and Li, Wei and Ma, Zejun and Li, Chunyuan},

month={June},

year={2024}

}

@misc{li2024llavanext-ablations,

title={LLaVA-NeXT: What Else Influences Visual Instruction Tuning Beyond Data?},

url={https://llava-vl.github.io/blog/2024-05-25-llava-next-ablations/},

author={Li, Bo and Zhang, Hao and Zhang, Kaichen and Guo, Dong and Zhang, Yuanhan and Zhang, Renrui and Li, Feng and Liu, Ziwei and Li, Chunyuan},

month={May},

year={2024}

}

@misc{li2024llavanext-strong,

title={LLaVA-NeXT: Stronger LLMs Supercharge Multimodal Capabilities in the Wild},

url={https://llava-vl.github.io/blog/2024-05-10-llava-next-stronger-llms/},

author={Li, Bo and Zhang, Kaichen and Zhang, Hao and Guo, Dong and Zhang, Renrui and Li, Feng and Zhang, Yuanhan and Liu, Ziwei and Li, Chunyuan},

month={May},

year={2024}

}

@misc{zhang2024llavanextvideo,

title={LLaVA-NeXT: A Strong Zero-shot Video Understanding Model},

url={https://llava-vl.github.io/blog/2024-04-30-llava-next-video/},

author={Zhang, Yuanhan and Li, Bo and Liu, haotian and Lee, Yong jae and Gui, Liangke and Fu, Di and Feng, Jiashi and Liu, Ziwei and Li, Chunyuan},

month={April},

year={2024}

}

@misc{liu2024llavanext,

title={LLaVA-NeXT: Improved reasoning, OCR, and world knowledge},

url={https://llava-vl.github.io/blog/2024-01-30-llava-next/},

author={Liu, Haotian and Li, Chunyuan and Li, Yuheng and Li, Bo and Zhang, Yuanhan and Shen, Sheng and Lee, Yong Jae},

month={January},

year={2024}

}

@misc{liu2023improvedllava,

title={Improved Baselines with Visual Instruction Tuning},

author={Liu, Haotian and Li, Chunyuan and Li, Yuheng and Lee, Yong Jae},

publisher={arXiv:2310.03744},

year={2023},

}

@misc{liu2023llava,

title={Visual Instruction Tuning},

author={Liu, Haotian and Li, Chunyuan and Wu, Qingyang and Lee, Yong Jae},

publisher={NeurIPS},

year={2023},

}