LLaVA-NeXT: A Strong Zero-shot Video Understanding Model

On January 30, 2024, we released LLaVA-NeXT, an open-source Large Multimodal Model (LMM) that has been trained exclusively on text-image data. With the proposed AnyRes technique, it boosts capabilities in reasoning, OCR, and world knowledge, demonstrating remarkable performance across a spectrum of image-based multimodal understanding tasks, and even exceeding Gemini-Pro on several image benchmarks, e.g. MMMU and MathVista.

In today’s exploration, we delve into the performance of LLaVA-NeXT within the realm of video understanding tasks. We reveal that LLaVA-NeXT surprisingly has strong performance in understanding video content. The current version of LLaVA-NeXT for videos has several improvements:

- Zero-shot video representation capabilities with AnyRes: The AnyRes technique naturally represents a high-resolution image into multiple images that a pre-trained VIT is able to digest, and forms them into a concantenated sequence. This technique is naturally generalizable to represent videos (consisting of multiple frames), allowing the image-only-trained LLaVA-Next model to perform surprisingly well on video tasks. Notably, this is the first time that LMMs show strong zero-shot modality transfer ability.

- Inference with length generalization improves on longer videos. The linear scaling technique enables length generalization, allowing LLaVA-NeXT to effectively handle long-video beyond the limitation of the "max_token_length" of the LLM.

- Strong video understanding ability. (1) LLaVA-Next-Image, which combines the above two techniques, yields superior zero-shot performance than open-source LMMs tuned on videos. (2) LLaVA-Next-Video, further supervised fine-tuning (SFT) LLaVA-Next-Image on video data, achieves better video understanding capabilities compared to LLaVA-Next-Image. (3) LLaVA-Next-Video-DPO, which aligns the model response with AI feedback using direct preference optimization (DPO), showing significant performance boost.

- Efficient deployment and inference with SGLang. It allows 5x faster inference on video tasks, allowing more scalable serving such as million-level video re-captioning. See instructions in our repo.

Open-Source Release

Results

| Data (Pre-training) |

Data (Post-training) |

Max Sequence Length | Model | Throughput (seconds/video) |

NextQA (WUPS@All) |

ActivityNet-QA (Acc/Score) |

Video Detailed Description (Score) |

VideoChat-GPT (Score) |

|||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Training | Inference | Correctness | Detail | Context | Temporal | Consistency | |||||||

| Proprietary | |||||||||||||

| N/A | N/A | - | - | GPT-4V (1106) | - | - | - | 4.00 | 4.09 | 3.88 | 4.37 | 3.94 | 4.02 |

| N/A | N/A | - | - | Flamingo | - | 26.7 | 45.3 | - | - | - | - | - | - |

| N/A | N/A | - | - | Gemini Pro | - | 28.0 | 49.8 | - | - | - | - | - | - |

| N/A | N/A | - | - | Gemini Ultra | - | 29.9 | 52.2 | - | - | - | - | - | - |

| Open-Source SoTA | |||||||||||||

| 558K | 765K | 4096 | 4096 | VideoChatGPT (7B) | - | - | 35.2/2.7 | - | 2.40 | 2.52 | 2.62 | 1.98 | 2.37 |

| 1260K | 765K | 4096 | 4096 | Video-LLaVA (7B) | - | - | 45.3/3.3 | - | 2.87 | 2.94 | 3.44 | 2.45 | 2.51 |

| 558K | 765K | 4096 | 4096 | VISTA-LLAMA (7B) | - | - | 48.3/3.3 | - | 2.44 | 2.31 | 2.64 | 3.18 | 2.26 |

| 35M | 1.9M | 4096 | 4096 | VideoChat2 (7B) | - | - | 49.1/3.3 | - | 3.02 | 2.88 | 3.51 | 2.66 | 2.81 |

| 790K | 765K | 4096 | 4096 | LLaMA-VID (7B) | 20 | 21.03 | 47.4/3.3 | 2.84 | 3.01 | 2.97 | 3.54 | 2.53 | 2.60 |

| LLaVA-NeXT | |||||||||||||

| 558K | 760K | 4096 | 4096 | LLaVA-NeXT-Image (7B)* | 4 | 26.00 | 41.6/2.8 | 2.64 | 2.29 | 2.38 | 2.83 | 2.41 | 2.47 |

| 558K | 760K | 4096 | 4096 | LLaVA-NeXT-Image (7B) | 16 | 26.88 | 53.8/3.2 | 2.76 | 2.94 | 2.91 | 3.43 | 2.23 | 3.00 |

| 558K | 760K | 4096 | 8192 | LLaVA-NeXT-Image (7B) | 33 | 27.33 | 53.5/3.2 | 3.12 | 3.05 | 3.12 | 3.68 | 2.37 | 3.16 |

| 558K | 860K | 4096 | 8192 | LLaVA-NeXT-Video (7B) | 37 | 26.90 | 53.5/3.2 | 3.32 | 3.39 | 3.29 | 3.92 | 2.60 | 3.12 |

| 558K | 860K+17K | 4096 | 8192 | LLaVA-NeXT-Video-DPO (7B) | 37 | 26.92 | 60.2/3.5 | 3.72 | 3.64 | 3.45 | 4.17 | 2.95 | 4.08 |

| 558K | 760K | 4096 | 4096 | LLaVA-NeXT-Image (34B) | 28 | 28.20 | 55.4/3.3 | 3.00 | 3.21 | 3.06 | 3.49 | 2.53 | 3.31 |

| 558K | 760K | 4096 | 8192 | LLaVA-NeXT-Image (34B) | 45 | 28.33 | 55.6/3.3 | 3.20 | 3.29 | 3.23 | 3.83 | 2.51 | 3.47 |

| 558K | 860K | 8192 | 8192 | LLaVA-NeXT-Video (34B) | 45 | 27.34 | 58.8/3.4 | 3.48 | 3.48 | 3.37 | 3.95 | 2.64 | 3.28 |

| 558K | 860K+17K | 8192 | 8192 | LLaVA-NeXT-Video-DPO (34B) | 45 | 27.30 | 64.42/3.6 | 3.84 | 3.81 | 3.55 | 4.24 | 3.14 | 4.12 |

✨ Highlights:

- SoTA Performance! Without seeing any video data, LLaVA-Next demonstrates strong zero-shot modality transfer ability, outperforming all the existing open-source LMMs (e.g., LLaMA-VID) that have been specifically trained for videos. Compared with proprietary ones, it achieves comparable performance with Gemini Pro on NextQA and ActivityNet-QA.

- Strong length generalization ability. Despite being trained under the sequence length constraint of a 4096-token limit, LLaVA-Next demonstrates remarkable ability to generalize to longer sequences. This capability ensures robust performance even when processing long-frame content that exceeds the original token length limitation.

- DPO pushes performance. DPO with AI feedback on videos yields significant performance gains.

Qualitative Results

Video Detailed Caption Tasks

User: Please provide a detailed description of the video, focusing on the main subjects, their actions, and the background scenes.

Prompt for T2V: A realistic image of clouds forming the word "SORA"

LLaVA-NeXT-Video

The video you've provided appears to be a digital artwork that features a large, fluffy white cloud in the sky with the word \"SORA\" written across it. The cloud is the main subject of the video, and it is positioned against a clear sky with a few wispy clouds in the background. The cloud itself is dense and appears to be a cumulus cloud, which is characterized by its fluffy, rounded shape and white color, suggesting that it could be a cloud that is forming or about to rain. The background is a gradient of blue, indicating that the sky is clear and possibly sunny, with a few wispy clouds scattered around, which adds depth to the scene. The overall atmosphere of the video is serene and somewhat dreamlike, with the cloud's formation and the clear sky creating a peaceful and somewhat surreal visual effect.

Video QA Tasks

User: Which part of the video goes against common sense?

Prompt for T2V: Step-printing scene of a person running, cinematic film shot in 35mm.

LLaVA-NeXT-Video

The video you've provided appears to show a person running on a treadmill, but the unusual aspect is that the person's legs are not aligned with the treadmill's belt. This defies common sense because when running on a treadmill, one would expect the person's legs to be in sync with the belt's motion, either moving forward or backward in a coordinated manner. In this video, the person's legs are positioned as if they are running in a different direction or at a different pace than the belt, which is not possible in reality and thus goes against common sense. This suggests that the video may have been manipulated or is a composite of two different scenes to create an illusion.

Technical Insights

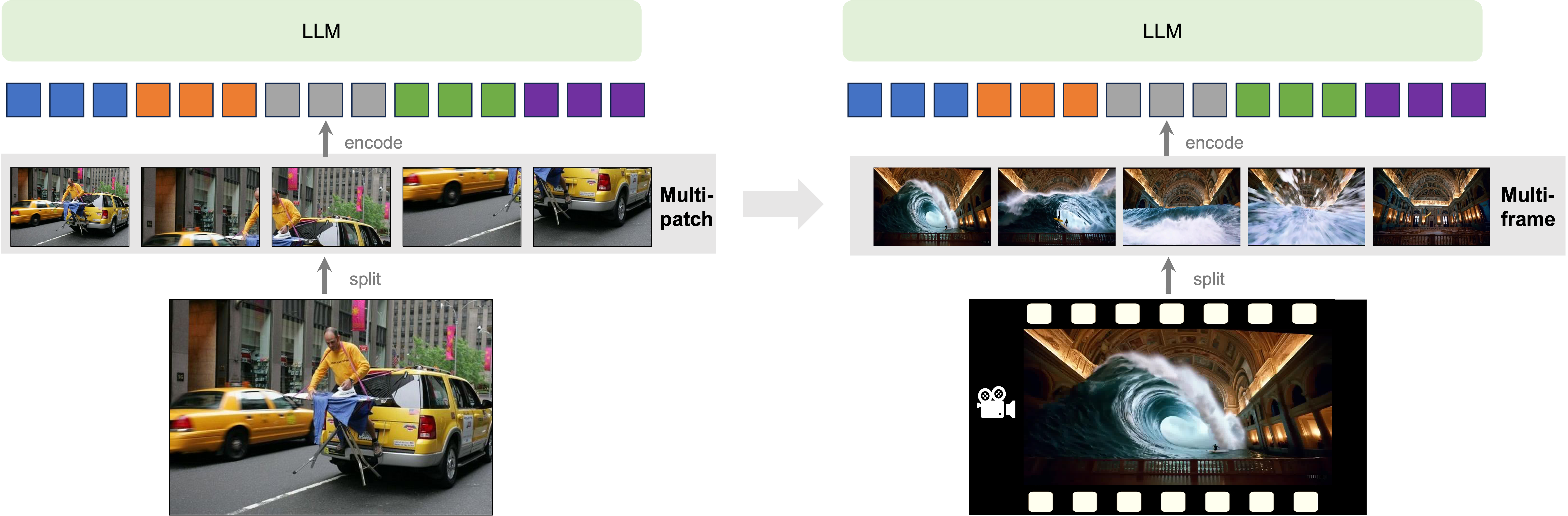

(1) AnyRes: From multi-patch to multi-frame

The AnyRes algorithm, introduced in LLaVA-NeXT, achieves an optimal balance between performance efficiency and operational costs to deal with images of any high-resolution. It segments the image into a grid of sub-images with various configurations, such as {2x2, 1x{2,3,4}, {2,3,4}x1}.

Illustration that AnyRes digests a set of image as a sequence of concatenated visual tokens, allowing unified image and video input, which natually suppots the evolution from multi-image to multi-frame

With minor code adjustments, LLaVA-NeXT can process N video frames arranged in a {1xN} grid. Assuming each frame comprises 24x24 tokens, the total token count for a video would be 24x24xN. However, considering the "max_token_length" limit of 4096 for the LLM, it is crucial to ensure that 24x24xN + the number of text tokens < 4096 to avoid nonsensical outputs. This necessitates a careful balance between the tokens per frame and the total number of frames. For example, applying spatial pooling with a stride of 2 reduces the tokens per frame from 24x24 to 12x12, accommodating up to 16 frames. Our findings indicate that a configuration of 12x12 tokens across 16 frames yields optimal performance. However, 16 frames may not sufficiently capture the essence of most videos. Next, we focus on enabling LLaVA-NeXT to handle more frames.

(2) Length generalization: From multi-frame to long-video.

Inspired by recent advance in dealing with long sequences in LLMs, such as the implementation of linear scaling in rotary position embeddings (RoPE), we apply a similar scaling approach in LLaVA-NeXT. By introducing a scaling factor of 2, for instance, we effectively double the "max_token_length" capacity of the model, enabling it to process sequences up to 8192 tokens. With this technique, LLaVA-NeXT can process up to 56 frames with a per frame token count of 12x12, significantly expanding the model's applicability in analyzing longer video sequences.

Illustration of length generalization to deal with a long sequence with much more frames, where the sequence length in inference can be longer than the sequence length in training.

This adaptation of linear scaling not only facilitates the processing of a greater number of frames but also opens new avenues for LLaVA-NeXT's application in comprehensive video analysis, setting a precedent for future research in the field.

(3) Direct Preference Optimization from AI Feedback

Recent advancements in reinforcement learning from human feedback (RLHF) have been effective in guiding LLMs towards generating more honest, helpful, and harmless content. Their effectiveness in multimodal contexts remains limited. The critical obstacle lies in (1) developing a robust reward system capable of distinguishing preferred responses from less preferred ones, and (2) acquiring high-quality preference data from humans is both costly and labor-intensive, especially for video inputs with multiple frames. As a cost-efficient alternative, AI feedback using LLM reward is considered in our recent study LLaVA-Hound. DPO is used to train LMM, based on the preference data generated by LLM, where videos are represented with their detailed captions as supporting evidence. Following the same recipe, we explore DPO based on the stronger SFT LMM LLaVA-NeXT-Video, leading to the final model LLaVA-NeXT-Video-DPO.

The results are presented in the main table at the beginning of the blog, showcasing DPO's significant improved performance gain, sometimes even surpassing the gains from supervised fine-tuning with video data. This highlights the promising future of exploring training methods that utilize AI or human feedback.

[Fold / Unfold] to see the examples with DPO

We illustrate two examples to demonstrate the superiority of DPO. Texts of interest are highlighted in blue, while parts that might contain hallucinations are marked in red. LLaVA-NeXT-Video-DPO reduces hallucinations, and follows the instructions very well, as it responds clearly to different parts of the instructions in separate sections. This resonates with the objective of alignment research: to better follow human intent. Note that we utilize Genimi-Pro, which supports video input, to assess the quality of the model's responses with and without DPO in the last column.

User: Please provide a detailed description of the video, focusing on the main subjects, their actions, the background scenes, and the temporal transitions.

LLaVA-NeXT-Video-DPO

The video depicts a dynamic and surreal scene where the action of surfing is juxtaposed with an indoor setting. In the foreground, there are two surfers riding waves, one slightly ahead of the other, maintaining their balance on surfboards. The surfer in the foreground is skillfully navigating the crest of a wave, while the one behind is positioned on the face of the wave, both displaying athletic postures typical of surfing. The waves are large and crashing, with the wave in the background creating a dramatic arc that suggests motion and power.

The background is an opulent interior space with high vaulted ceilings, ornate detailing, and classical architecture, including arches and what appears to be marble or stone work. The lighting is warm, with a golden hue that contrasts with the cool tones of the water. The architecture suggests a grand hall or a space designed for public gatherings or performances, possibly inspired by classical or neoclassical styles.

The temporal transition in the video is interesting as it captures a moment of action (the surfers riding the wave) within a stationary, non-moving environment (the interior space). This creates a surreal effect, blending the dynamic motion of the ocean with the static elegance of a building interior. The image is a blend of two disparate subjects—the exhilarating sport of surfing and the serene grandeur of architectural design—into a single, captivating composition.

LLaVA-NeXT-Video

The video depicts a large wave crashing inside a building, with a surfer riding the wave. The surfer is positioned in the center of the frame, skillfully navigating the wave's crest. The wave is massive, filling the majority of the space from the top to the bottom of the frame, with its white foam and water spray creating a dynamic and powerful visual effect. The background of the scene is a grand hall with high ceilings and ornate architecture, featuring arches and columns that add a sense of grandeur to the setting. The lighting is dim, with the focus on the surfer and the wave, casting shadows and highlighting the contours of the wave's movement. The camera captures the surfer from a low angle, emphasizing the height of the wave and the surfer's skill in maintaining balance. As the wave begins to break, the surfer is seen riding it towards the right side of the frame, creating a sense of motion and direction. The video transitions from a close-up of the surfer to a wider shot, showing the full scale of the wave and the grand hall. The sound of the crashing wave is prominent, adding to the immersive experience. The video concludes with a shot of the surfer disappearing from view as the wave subsides, leaving the grand hall empty and still.

Evaluation and Comments

Description A: LLaVA-NeXT-Video-DPO

Description B: LLaVA-NeXT-Video

Comparison:

Description A provides a more comprehensive and evocative description of the video, capturing the surreal juxtaposition of surfing with the opulent indoor setting. It highlights the dynamic motion of the surfers and the contrasting serenity of the architecture, creating a unique and captivating image.

Description B, on the other hand, focuses more on the surfer riding the wave within the grand hall. While it describes the visual elements and motion of the surfer, it lacks the depth and nuance of Description A in capturing the surreal and immersive nature of the scene.

Conclusion:

Description A is the better description for this video as it provides a more complete and engaging portrayal of the surreal scene, capturing the blend of dynamic action and serene grandeur, while also highlighting the unique juxtaposition of surfing with the opulent indoor setting.

More Empirical Explorations

(1) How to represent videos? Configurations: (# Tokens/Frame, # Frames)

As discussed in the "From Multi-Patch to Multi-Frame" section, navigating the "max_token_length" constraint of 4096 for the Large Language Model (LLM) necessitates a strategic balance between the number of tokens allocated per frame and the total frame count included in the analysis. Our exploration led to an optimal setup: configuring each frame to contain 12x12 tokens and sampling 16 frames for each video.

Further amplifying the model's capabilities, "linear scaling" emerges as a pivotal tool in exploring configurations that accommodate more image tokens, thus enabling longer inference tokens. The table below encapsulates our findings, illustrating that configurations enabled by "linear scaling" significantly enhance performance by allowing for longer sequences of inference tokens.

| Model | Pooling Stride | # Tokens/Frame | # Frames | Video Detailed Description (Score) |

|---|---|---|---|---|

| LLaVA-NeXT-Image-7B |

Max inference tokens=4096 | |||

| 4 | 6X6 | 32 | 2.73 | |

| 4 | 6X6 | 64 | 2.24 | |

| 2 | 12X12 | 16 | 2.76 | |

| 1 | 24X24 | 4 | 2.71 | |

| Max inference tokens=8192 (with linear scale factor=2) | ||||

| 2 | 12X12 | 32 | 3.12 | |

| 1 | 24X24 | 8 | 3.08 | |

| Max inference tokens=16384 (with linear scale factor=4) | ||||

| 2 | 12X12 | 64 | 3.12 | |

| 1 | 24X24 | 16 | 3.16 | |

(2) How to fine-tune on videos?

It is natural to further tune the model on video data for performance boost. Our analysis reveals that a mixed training regimen of video and image data is essential for optimizing the performance of "LLaVA-NeXT-Video". Specifically, we consider different strategies: (1) Continual fine-tuning LLaVA-NeXT stage-2 checkpoint on video data only; (2) Starting from a LLaVA-NeXT stage-1 checkpoint, the model is tuned on the joint data of image and video in Stage-2, where data types in each batch can be split (each batch only contains one type) or mixed (each batch contains both types). It becomes evident that training with batches that mix image and video data performs the best, while other strategies even perform worse than LLaVA-NeXT-Image models. This outcome emphasizes the importance of mixing video and image data in the training process to enhance the model's proficiency in video-related tasks.

| Model | Training recipes | Pooling Stride | #Tokens/Frame | # Frames | Video Detailed Description (Score) |

|---|---|---|---|---|---|

| LLaVA-NeXT-Image-7B | - | 2 | 12X12 | 32 | 3.14 |

| LLaVA-NeXT-Video-7B | Continual fine-tuning on videos | 3.02 | |||

| Joint video-image: Batch data split | 3.00 | ||||

| Joint video-image: Batch data mixed | 3.32 |

More Examples

Team

- Yuanhan Zhang: Nanyang Technological University

(Work collaborated with ByteDance/TikTok)

(Work collaborated with ByteDance/TikTok) - Bo Li: Nanyang Technological University

(Work collaborated with ByteDance/TikTok)

(Work collaborated with ByteDance/TikTok) - Haotian Liu: University of Wisconsin-Madison

- Yong Jae Lee: University of Wisconsin-Madison

- Liangke Gui: Bytedance/Tiktok

- Di Fu: Bytedance/Tiktok

- Jiashi Feng: Bytedance/Tiktok

- Ziwei Liu: Nanyang Technological University

- Chunyuan Li: Bytedance/Tiktok

Acknowledgement

- We thank Kaichen Zhang, Kairui Hu, Fanyi Pu, for the building of video benchmark.

- We thank Lianmin Zheng, for the integration of LLaVA-Next-Video to SGLang.

Related Blogs

- LLaVA-NeXT: Stronger LLMs Supercharge Multimodal Capabilities in the Wild

- LLaVA-NeXT: Improved reasoning, OCR, and world knowledge

- Accelerating the Development of Large Multimodal Models with LMMs-Eval

Citation

@misc{zhang2024llavanextvideo,

title={LLaVA-NeXT: A Strong Zero-shot Video Understanding Model},

url={https://llava-vl.github.io/blog/2024-04-30-llava-next-video/},

author={Zhang, Yuanhan and Li, Bo and Liu, haotian and Lee, Yong jae and Gui, Liangke and Fu, Di and Feng, Jiashi and Liu, Ziwei and Li, Chunyuan},

month={April},

year={2024}

}

@misc{liu2024llavanext,

title={LLaVA-NeXT: Improved reasoning, OCR, and world knowledge},

url={https://llava-vl.github.io/blog/2024-01-30-llava-next/},

author={Liu, Haotian and Li, Chunyuan and Li, Yuheng and Li, Bo and Zhang, Yuanhan and Shen, Sheng and Lee, Yong Jae},

month={January},

year={2024}

}

@misc{liu2023improvedllava,

title={Improved Baselines with Visual Instruction Tuning},

author={Liu, Haotian and Li, Chunyuan and Li, Yuheng and Lee, Yong Jae},

publisher={arXiv:2310.03744},

year={2023},

}

@misc{liu2023llava,

title={Visual Instruction Tuning},

author={Liu, Haotian and Li, Chunyuan and Wu, Qingyang and Lee, Yong Jae},

publisher={NeurIPS},

year={2023},

}