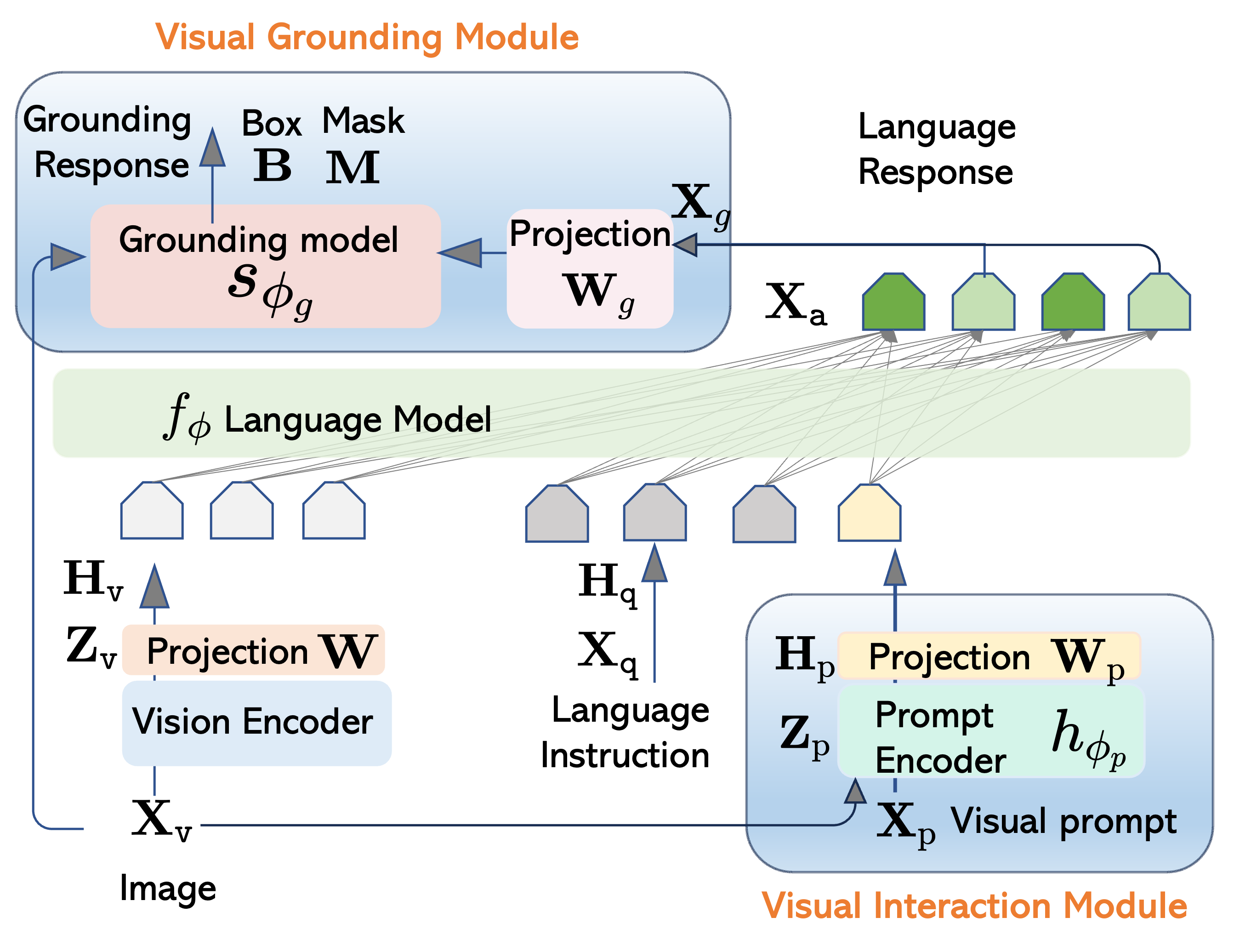

LLaVA-Grounding maintains

Prompt encoder.

@misc{zhang2023llavagrounding,
  title={LLaVA-Grounding: Grounded Visual Chat with Large Multimodal Models},
  author={Hao Zhang and Hongyang Li and Feng Li and Tianhe Ren and Xueyan Zou and Shilong Liu and Shijia Huang and Jianfeng Gao and Lei Zhang and Chunyuan Li and Jianwei Yang},
  year={2023},
  booktitle={arXiv}
}

This website is adapted from Nerfies, licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. We thank the LLaMA team for giving us access to their models, as well as open-source projects including Alpaca and Vicuna.

Usage and License Notices: The data, code, and checkpoint are intended and licensed for research use only. They are also restricted to uses that follow the license agreements of LLaVA, CLIP, LLaMA, Vicuna, and GPT-4. The dataset is licensed under CC BY NC 4.0 (allowing only non-commercial use), and models trained using the dataset should not be used outside of research purposes.

Related Links:

[REACT]

[GLIGEN]

[Computer Vision in the Wild (CVinW)]

[Instruction Tuning with GPT-4]